Purpose: The System Usability Scale (SUS) Score quantitatively measures the perceived usability of a product using a standardised 10-question survey.

Design Thinking Phase: Test

Time: 10-minute participant session + 1–2 hours analysis

Difficulty: ⭐⭐

When to use:

- When validating usability improvements after iterative design changes

- To benchmark against usability norms across similar digital products

- During A/B testing or after major redesigns to measure perception shifts

What it is

The SUS Score is a standardised questionnaire developed by John Brooke in 1986 to evaluate the usability of digital interfaces. It collects user responses to 10 Likert-scale items, producing a single score between 0–100 that reflects the overall perceived ease of use. It’s quick, reliable, and surprisingly diagnostic when combined with qualitative follow-ups.

Why it matters

Quantifying usability can be tricky, especially when teams rely too heavily on anecdotal feedback or ambiguous metrics. SUS provides a consistent, low-cost way to gauge users' subjective experience, making it ideal for comparing designs, measuring trends over time, or catching post-launch regressions. For stakeholders, a single score offers clarity. For UX teams, the value lies in the distribution: question-level responses reveal deeper issues that the headline score hides.

When to use

- Near the end of usability testing to validate whether issues were resolved

- To compare different versions of the same experience (e.g., pre vs post-redesign)

- When teams need a lightweight, reliable usability metric without running a full research study

Benefits

- Speed: Participants complete it in a few minutes, and scoring needs nothing more than a spreadsheet.

- Comparability: A standardised 0–100 score lets you compare versions, track trends over time, and benchmark against published norms.

- Flexibility: Works across various project types and timelines.

How to use it

Follow this process to run and interpret a SUS-based test:

- Recruit 5–10 participants who fit your user persona.

- After each participant completes a core interaction or task journey, ask them to complete the 10-question SUS questionnaire.

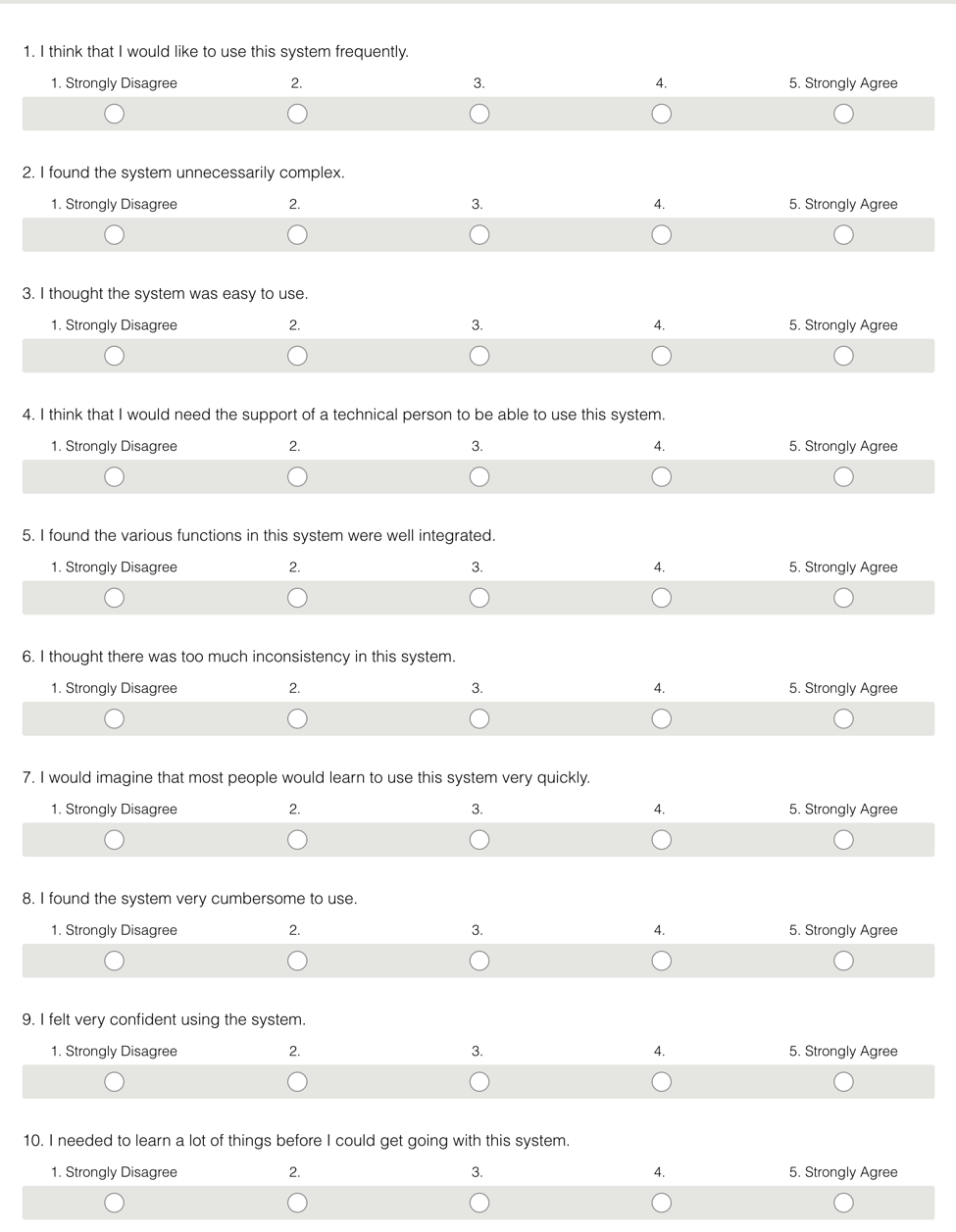

- Here are the 10 template questions which you can adapt to suit your website:

- Q1: I think that I would like to use this system frequently.

- Q2: I found the system unnecessarily complex.

- Q3: I thought the system was easy to use.

- Q4: I think that I would need the support of a technical person to be able to use this system.

- Q5: I found the various functions in this system were well integrated.

- Q6: I thought there was too much inconsistency in this system.

- Q7: I would imagine that most people would learn to use this system very quickly.

- Q8: I found the system very cumbersome to use.

- Q9: I felt very confident using the system.

- Q10: I needed to learn a lot of things before I could get going with this system.

- Calculate each participant's score: for odd-numbered questions, subtract 1 from the response (1–5 scale); for even-numbered questions, subtract the response from 5. Sum the ten adjusted values (giving 0–40) and multiply the total by 2.5 to yield a score from 0 to 100. Average the participants' scores to get the study's overall SUS score.

- Compare scores to industry benchmarks. A score over 68 is above average; under 50 needs attention.

- Optional diagnostic: Review individual question deltas and note clusters of negative sentiment.
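The scoring and diagnostic steps above can be sketched in Python. This is a minimal illustration, assuming each participant's answers are stored as a list of ten integers (1–5) in questionnaire order; the function names and sample responses are hypothetical, and the band labels simply reuse the 68 and 50 thresholds quoted above.

```python
from statistics import mean


def adjusted_values(responses):
    """Convert ten raw SUS responses (1-5) into adjusted item values (0-4)."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    # Odd-numbered items (positively worded): response - 1.
    # Even-numbered items (negatively worded): 5 - response.
    return [
        r - 1 if item % 2 == 1 else 5 - r
        for item, r in enumerate(responses, start=1)
    ]


def sus_score(responses):
    """One participant's SUS score: adjusted total (0-40) scaled to 0-100."""
    return sum(adjusted_values(responses)) * 2.5


def benchmark_band(score):
    """Label a score using this guide's 68 / 50 thresholds (hypothetical labels)."""
    if score > 68:
        return "above average"
    if score < 50:
        return "needs attention"
    return "between 50 and 68"


def item_means(all_responses):
    """Mean adjusted value per question (0-4); low values flag weak items."""
    per_participant = [adjusted_values(r) for r in all_responses]
    return [mean(column) for column in zip(*per_participant)]


if __name__ == "__main__":
    # Hypothetical responses from two participants, Q1..Q10 in order.
    participants = [
        [4, 2, 4, 1, 4, 2, 5, 2, 4, 2],
        [3, 3, 3, 2, 3, 3, 4, 3, 3, 3],
    ]
    scores = [sus_score(p) for p in participants]
    print(scores)  # [80.0, 55.0]
    average = mean(scores)
    print(average, benchmark_band(average))  # 67.5 between 50 and 68
    print(item_means(participants))
```

Keeping the adjusted item values, rather than only the final score, is what makes the optional diagnostic possible: a question whose mean stays close to 0 across participants is a natural place to start follow-up probing.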

Example Output

During testing of an updated mobile checkout flow, 7 participants completed the SUS questionnaire:

- Average Score: 72.1

- Lowest individual score: 55 (attributed to an unclear payment step)

- Participants 5 and 6 rated Q2 (“I found the system unnecessarily complex”) a 4 out of 5, prompting follow-up

Common Pitfalls

- Overreliance on the average: Mean scores can mask severe outliers. Always look at the spread.

- No follow-up probing: The SUS score gives you a ‘what’ — not a ‘why’. Combine with interviews or written feedback.

- Misuse on unfinished designs: SUS is meant for relatively complete flows. Don’t apply it to half-built prototypes or to tasks that depend on missing critical functions.
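To act on the first pitfall, report the spread alongside the mean. The sketch below is a minimal Python illustration, assuming you already have one SUS score per participant; the function name, the sample scores, and the reuse of 50 as a flagging threshold are illustrative assumptions rather than a standard.

```python
from statistics import mean, median, stdev


def sus_spread(scores, flag_below=50):
    """Summarise per-participant SUS scores so outliers are not hidden by the mean."""
    if len(scores) < 2:
        raise ValueError("need at least two scores to describe spread")
    return {
        "n": len(scores),
        "mean": round(mean(scores), 1),
        "median": median(scores),
        "min": min(scores),
        "max": max(scores),
        "stdev": round(stdev(scores), 1),  # sample standard deviation
        # Scores under the guide's "needs attention" line (assumed cut-off).
        "flagged": sorted(s for s in scores if s < flag_below),
    }


if __name__ == "__main__":
    # Hypothetical scores: a healthy-looking mean hides one very low score.
    summary = sus_spread([85.0, 82.5, 80.0, 77.5, 45.0])
    print(summary["mean"], summary["min"], summary["flagged"])  # 74.0 45.0 [45.0]
```

Here the mean of 74.0 sits above the 68 benchmark, yet one participant scored 45.0; the minimum and the flagged list make that visible where the mean alone would not.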

10 Design-Ready AI Prompts for SUS Score – UX/UI Edition

How These Prompts Work (C.S.I.R. Framework)

Each of the templates below follows the C.S.I.R. method, a simple structure for writing clear, effective prompts that get better results from ChatGPT, Claude, Copilot, or any other LLM.

C.S.I.R. stands for:

- Context: Who you are and the UX situation you're working in

- Specific Info: Key design inputs, tasks, or constraints the AI should consider

- Intent: What you want the AI to help you achieve

- Response Format: The structure or format you want the AI to return (e.g. checklist, table, journey map)

Prompt Template 1: “Summarise SUS Score Patterns Across Test Participants”

Context: You are a UX Researcher reviewing SUS scores collected from a usability test with [X participants].

Specific Info: Each participant completed the 10-question SUS questionnaire after testing the [product or feature], and you've tabulated raw scores per question.

Intent: Identify patterns across question-level responses and suggest follow-up areas for qualitative exploration.

Response Format: Return a table showing the three highest- and three lowest-scoring questions (after adjusting for each item's positive or negative wording), summary insights, and 2–3 follow-up research suggestions.

If question wording or context is missing, ask for that before proceeding.

Then, list one angle where stakeholder alignment should be explored.

Prompt Template 2: “Diagnose Low SUS Scores Using Correlated Feedback”

Context: You’re a UX Lead reviewing a set of low SUS scores after shipping a release of [feature/product].

Specific Info: You have both SUS scores and users’ open-ended comments from follow-up interviews.

Intent: Link low-scoring areas to specific user language and surface key usability bottlenecks.

Response Format: Return a list of 3–5 potential root issues, each paired with associated user quotes.

Ask for sample SUS question responses and user notes before supplying final output.

Suggest a prioritisation method for surfacing critical issues from these patterns.

Prompt Template 3: “Create Benchmarks from Prior SUS Testing Rounds”

Context: You’re a DesignOps researcher consolidating three rounds of quarterly SUS testing.

Specific Info: Each round tested [same core user flow] but with design updates applied.

Intent: Generate a benchmarking report to track progress and regression.

Response Format: Return a comparison table with columns for test round, average SUS score, score change vs previous, and diagnostic notes.

Ask whether the sample size changed across rounds, and flag comparisons where small or unequal samples weaken the conclusions.

Suggest one visualisation to present this to non-research stakeholders.

Prompt Template 4: “Draft Clear SUS Follow-Up Interview Questions”

Context: You’re a UX Researcher preparing follow-up interviews after collecting SUS survey data.

Specific Info: Some questions scored particularly poorly (e.g. Q2, Q8), and you need to understand why.

Intent: Elicit qualitative explanations from users on why they rated certain items poorly.

Response Format: Create 5–7 open-ended, neutral, behaviourally focused questions aligned with the SUS framework.

Ask which questions underperformed before refining the script.

Suggest one strategy to link responses back into design recommendations.

Prompt Template 5: “Generate SUS-Adapted Questions for Mobile-First Apps”

Context: You’re redesigning the usability study for a mobile-first productivity app.

Specific Info: Participants had difficulty understanding task progress and navigation, affecting perceived ease of use.

Intent: Create a modified set of usability perception questions aligned with SUS but tailored for mobile contexts.

Response Format: Return 8–10 revised questions in Likert format with notes on how they deviate from canonical SUS.

Ask what specific mobile features or gestures caused friction.

Then, suggest whether a mixed-method study would better validate usability perception.

Prompt Template 6: “Compare SUS Results Between Personas”

Context: You’re reviewing SUS data split across two key personas ([Persona A] and [Persona B]).

Specific Info: Each persona group completed the same core task and survey.

Intent: Identify usability perception differences between personas.

Response Format: Return a comparison matrix with average scores per persona, the questions with the largest score differences between personas, and design takeaways.

Ask for each persona's key traits and a summary of each participant sample.

Indicate what kinds of usability solutions might disproportionately benefit one group.

Prompt Template 7: “Write a Stakeholder Summary of SUS Findings”

Context: You’re preparing a summary report of SUS results for product leadership.

Specific Info: Testing was done on a newly released [feature or flow], and scores were lower than expected.

Intent: Communicate findings in non-technical language while retaining critical insight.

Response Format: A concise 3-paragraph summary including context, key findings, and recommended next steps.

Ask for exact SUS figures and notable quotes first.

Then suggest one visual format to pair with the summary (e.g. radar graph, heatmap).

Prompt Template 8: “Draft an Internal SUS Protocol for Design Teams”

Context: You’re a DesignOps Manager standardising usability testing processes across squads.

Specific Info: You want each team to use the SUS scale consistently after each feature build.

Intent: Create a one-pager protocol outlining when to use SUS, how to structure the sessions, and how to store/share the data.

Response Format: Return a checklist + short how-to guide (under 300 words).

Ask for team maturity, tools in use, and any prior methods.

Offer a template teams can clone into Notion or Confluence.

Prompt Template 9: “Predict Usability Risks from SUS Sentiment Trends”

Context: You’re part of a ResearchOps team running sentiment tracking using SUS over time.

Specific Info: You’ve spotted downward sentiment in Q4 and Q7 across three releases.

Intent: Identify emerging usability risks affecting satisfaction or task confidence.

Response Format: A short risk matrix of top concerns, severity, and user quotes suggesting growing dissatisfaction.

Ask how task design changed during release cycles.

Recommend which risks need fast intervention vs monitoring.

Prompt Template 10: “Write UX Insight Cards from SUS Survey Results”

Context: You’re turning SUS-based research into shareable reference artefacts for cross-functional teams.

Specific Info: Scores revealed weaknesses in how intuitive and consistent users found the navigation.

Intent: Create UX insight cards summarising results with context and design recommendations.

Response Format: List 3–4 cards with title, insight, short quote, and next step.

Ask how teams currently share research internally.

Suggest formats for design system integration.

Recommended Tools

- Maze – Quick SUS testing integration

- Lookback – For pairing SUS surveys with behavioural replay

- Paste.app or Notion – Document and share insight cards post-analysis

- Excel or Google Sheets – Manual score conversion and basic analysis templates

Learn More